Rapid development in SwiftUI for niche problems

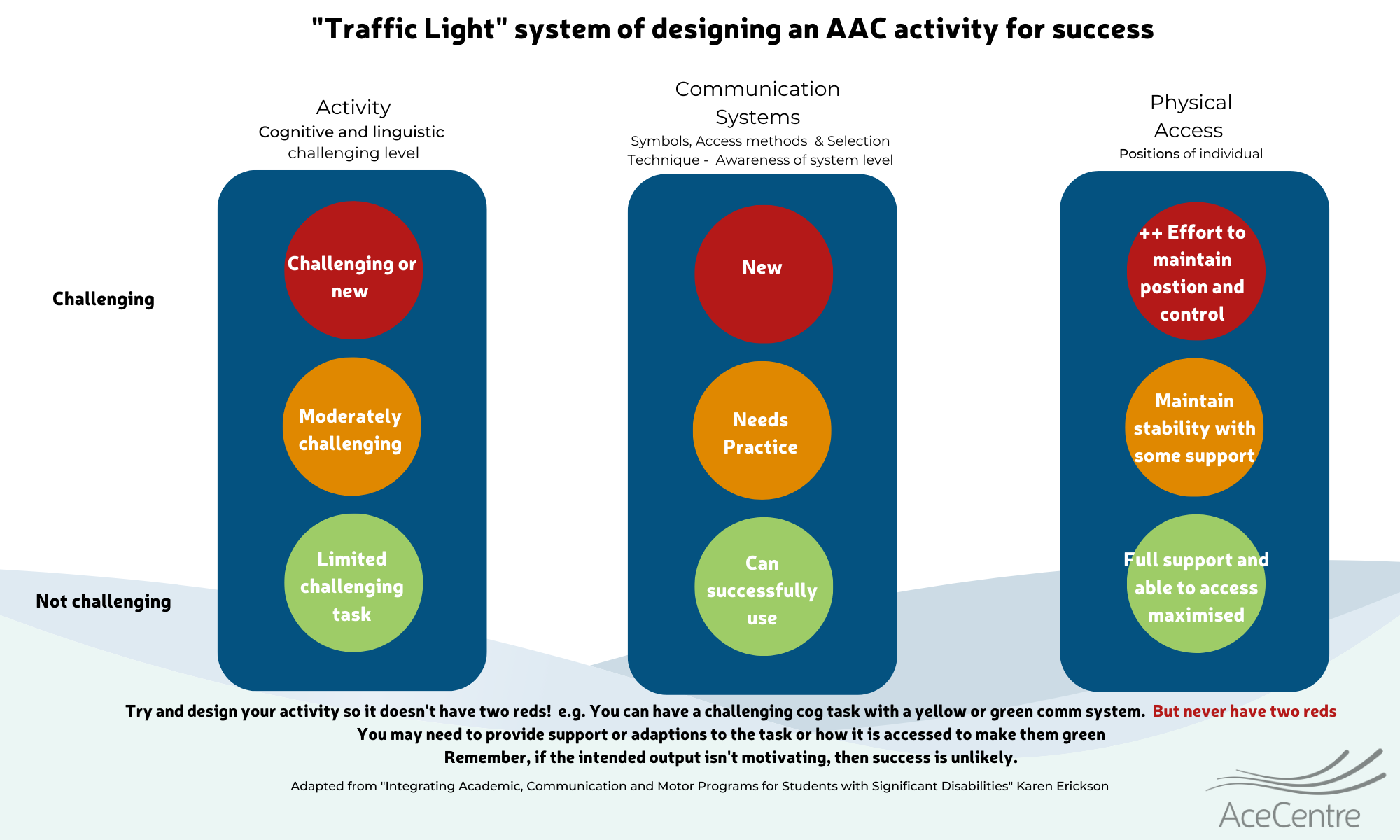

Some clients we see are fantastic with paper-based solutions. But finding powered AAC systems that give them more independence is sometimes far trickier than you might think. Consider Judith. She doesn’t lift her finger from the paper, and, surprisingly, this kind of continuous movement is not well supported in AAC. The obvious candidates are SwiftKey and Swype, but both require the finger to lift at the end of a word or at some other point. Next, you might try a keyguard or a TouchGuide. But for some users, this is too big a change in how they interact. Even if you succeed, you are often asking the end user to change the orientation or layout of their paper-based system, and all in all, it’s just too much change. Abandonment is likely; the paper-based system is simply more reliable.

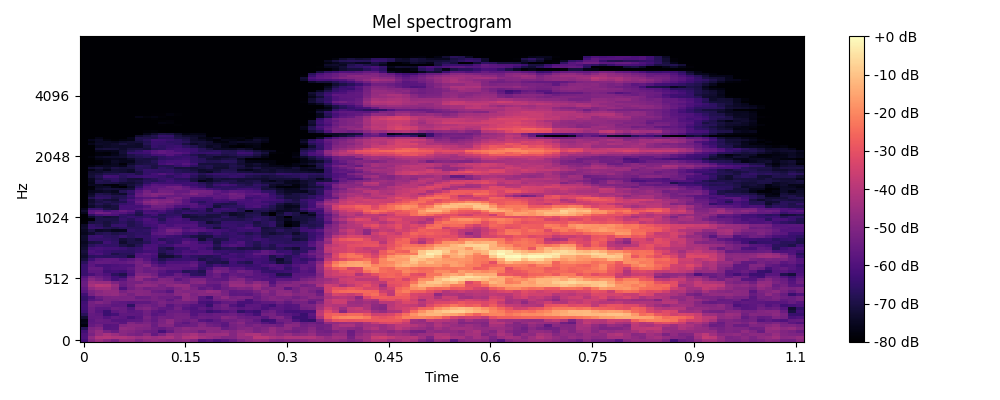

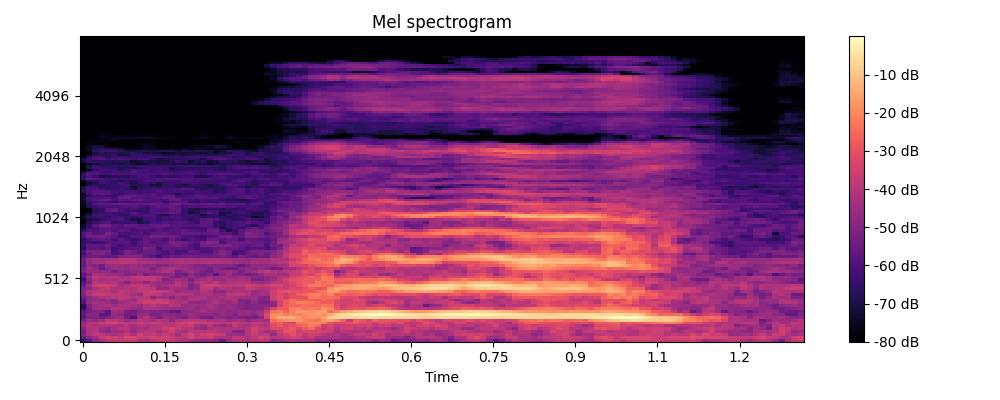

So what do we do? We could look at a bespoke system, but that typically requires a lot of thought, effort and scoping. That’s still needed, but these days you can draft something far more quickly using ChatGPT: it wrote the whole app after a two-hour stint of prompt writing. That’s awesome. (Thanks also to Gavin, who tidied up the loose ends.)

The app can pick out each letter in one of two ways: by detecting a change in the angle of the finger’s movement, or by detecting a short dwell on the letter. Next, we need to trial this with our end user, see what works and what doesn’t, and develop it from there. We may need a way of writing without going to the space key (something we see quite a lot), and I can see us implementing a much-needed feature: autocorrect. This is all achievable. For now, though, we have a working solution to trial: a 500-line app made in less than a day’s work.
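The app’s source isn’t included here, so the following is only a minimal sketch, in plain Swift with no SwiftUI dependency, of how the two selection methods (a change in direction, or a short dwell) might be applied to a trace where the finger never lifts. Everything in it is an illustrative assumption rather than the app’s actual code: the hypothetical `TracePoint`, `Key` and `ContinuousTraceSelector` types, the rectangular key layout, and the default thresholds (a 60° turn, a 0.6 s dwell and a 5-point jitter filter), which would need tuning during the trial.

```swift
import Foundation

/// One sample of the finger's position (hypothetical type). In a SwiftUI view these
/// would come from a drag gesture; a plain struct keeps the logic testable without a UI.
struct TracePoint {
    let x: Double
    let y: Double
    let time: TimeInterval   // seconds since the trace started
}

/// A hypothetical rectangular key on a layout copied from the paper board.
struct Key {
    let letter: Character
    let minX: Double, minY: Double, maxX: Double, maxY: Double

    func contains(_ p: TracePoint) -> Bool {
        p.x >= minX && p.x < maxX && p.y >= minY && p.y < maxY
    }
}

/// Turns a continuous, never-lifted trace into letters. A letter is selected when the
/// finger turns sharply on its key or dwells on it; each visit selects at most once.
final class ContinuousTraceSelector {
    let keys: [Key]
    let angleThreshold: Double      // heading change (radians) that counts as a corner
    let dwellTime: TimeInterval     // time on one key that counts as a dwell
    let minStep: Double             // movements shorter than this are treated as jitter

    private(set) var output: [Character] = []

    private var visit = 0           // bumped each time the finger moves onto a different key or gap
    private var currentKey: Character?
    private var enteredAt: TimeInterval = 0
    private var lastSelectedVisit = -1
    private var anchor: (point: TracePoint, visit: Int)?   // last point that moved >= minStep
    private var lastHeading: Double?

    init(keys: [Key], angleThreshold: Double = .pi / 3,
         dwellTime: TimeInterval = 0.6, minStep: Double = 5) {
        self.keys = keys
        self.angleThreshold = angleThreshold
        self.dwellTime = dwellTime
        self.minStep = minStep
    }

    func add(_ p: TracePoint) {
        // Dwell: track how long the finger has stayed over the same key.
        let key = keys.first(where: { $0.contains(p) })?.letter
        if visit == 0 || key != currentKey {
            visit += 1
            currentKey = key
            enteredAt = p.time
        }
        if let key = key, p.time - enteredAt >= dwellTime {
            select(key, visit: visit)
        }

        // Direction change: compare headings of successive steps longer than minStep.
        guard let a = anchor else { anchor = (point: p, visit: visit); return }
        let dx = p.x - a.point.x, dy = p.y - a.point.y
        guard (dx * dx + dy * dy).squareRoot() >= minStep else { return }
        let heading = atan2(dy, dx)
        if let prev = lastHeading, turn(from: prev, to: heading) >= angleThreshold,
           let cornerKey = keys.first(where: { $0.contains(a.point) })?.letter {
            // The corner is at the anchor point, so select the key under it.
            select(cornerKey, visit: a.visit)
        }
        lastHeading = heading
        anchor = (point: p, visit: visit)
    }

    /// Absolute angle between two headings, folded into 0...pi.
    private func turn(from a: Double, to b: Double) -> Double {
        var d = abs(b - a).truncatingRemainder(dividingBy: 2 * .pi)
        if d > .pi { d = 2 * .pi - d }
        return d
    }

    private func select(_ letter: Character, visit v: Int) {
        guard v > lastSelectedVisit else { return }   // at most one letter per key visit
        output.append(letter)
        lastSelectedVisit = v
    }
}
```

In a SwiftUI view, samples could be fed in from a `DragGesture(minimumDistance: 0)` `.onChanged` handler by converting `value.location` to `Double` coordinates; because the user never lifts their finger, `.onEnded` would only mark the end of a session. Keeping the selection logic in its own type like this would also make it easy to trial each method separately with the end user.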