Iterative Rapid Development, Part 2 (aka Judith, Part 2).

So sometimes... just sometimes... a couple of months' work pays off. Or I guess we could frame this post around successful product development: keeping the end user at the centre of your development, and involving them at every stage of the cycle, is key. We are proud to shout loudly about this.

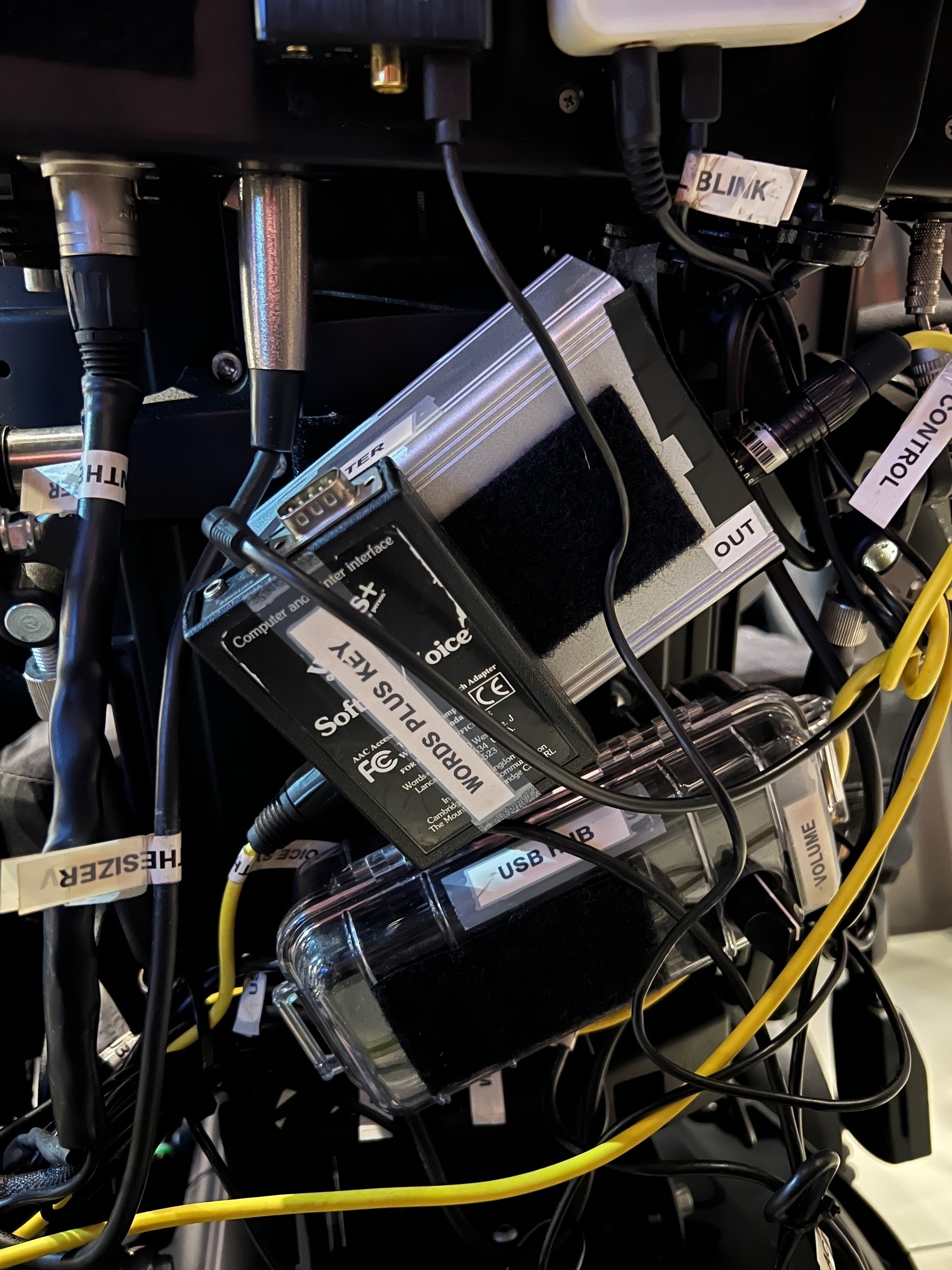

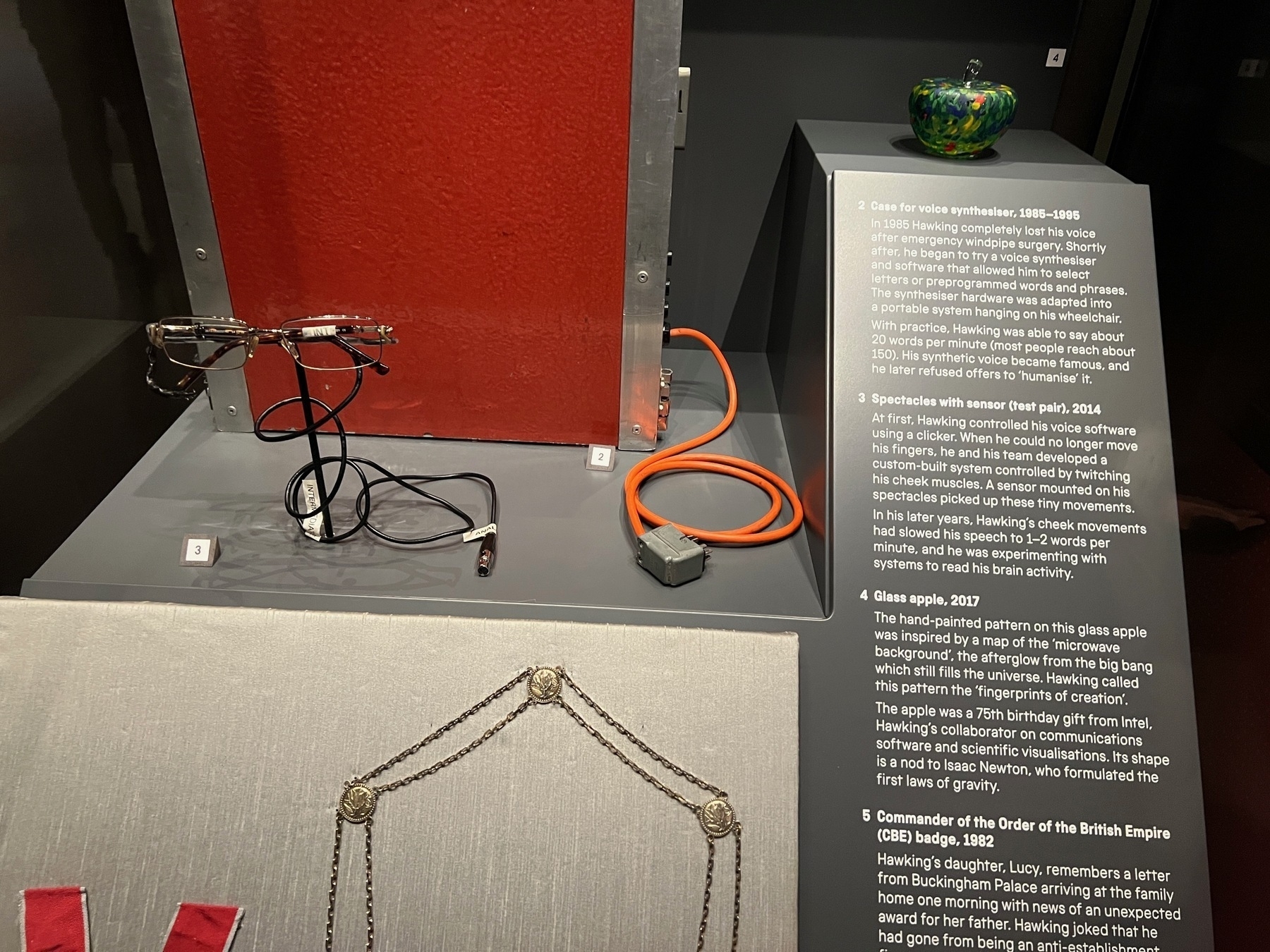

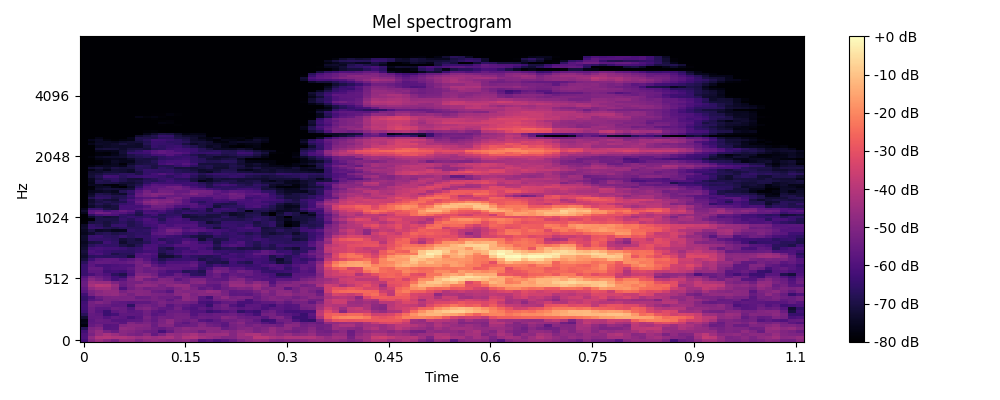

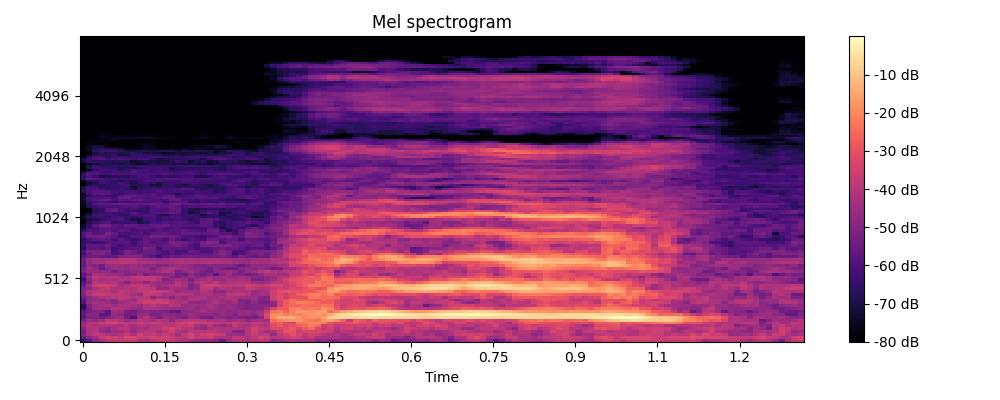

So two months ago we saw Judith. Technology has not worked for her before (you can read more about why in my previous posts). So we rapidly developed a SwiftUI app that was unique in how it worked: you drag from one letter to the next. When we tried it with Judith we still had a problem: positional errors and missing letters were frequent. So we then rapidly worked on a “correct-a-sentence” system and settled on the Azure OpenAI API (GPT-3.5). We then developed our own custom model alongside it, because we needed something that worked offline and was privacy-first (NB: the videos show this offline model, not GPT). After a few iterations, it now corrects as well as GPT-3.5 83% of the time.

So two months later, what does this all mean for the end user? Judith can now use technology to write and communicate for the very first time. Happy? Well, so far, yes. But long-term AAC use is complex and not just down to the technology. So let's be curious and proud at the same time. There is more to do:

- Small tweaks to the UI

- It's correcting a bit too much at times: sometimes it makes more grammar changes than I would like and, equally dangerous, it loses words. To fix this we could train our custom model further.

...and more, I'm sure, when we next review this round of the iterative development cycle.
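Retraining is the real fix for lost words, but a cheap safety net could also sit beside the model. Below is a minimal Swift sketch, under our own assumptions, of one such check. It is not how our custom model works, and every function name in it is hypothetical. For each typed word it looks for a close match in the corrected sentence, using Levenshtein edit distance so that misspellings such as "wnt" still count as present. Typed words with no close match are flagged as possibly dropped. The threshold of 2 is an assumed value. It is also crude: very short words match almost anything within that distance.

```swift
import Foundation

/// Levenshtein edit distance: insertions, deletions and substitutions each cost 1.
/// Note that a swapped pair of letters ("pos" vs "ops") costs 2 under this measure.
func editDistance(_ s: String, _ t: String) -> Int {
    let a = Array(s), b = Array(t)
    if a.isEmpty { return b.count }
    if b.isEmpty { return a.count }
    var prev = Array(0...b.count)                      // distances for the previous row
    var curr = [Int](repeating: 0, count: b.count + 1)
    for i in 1...a.count {
        curr[0] = i
        for j in 1...b.count {
            let cost = a[i - 1] == b[j - 1] ? 0 : 1
            curr[j] = min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost)
        }
        swap(&prev, &curr)                             // the finished row becomes "previous"
    }
    return prev[b.count]
}

/// Lowercases and splits on anything that is not a letter or digit.
func tokenize(_ text: String) -> [String] {
    text.lowercased()
        .components(separatedBy: CharacterSet.alphanumerics.inverted)
        .filter { !$0.isEmpty }
}

/// Hypothetical guard: typed words with no close match in the corrected sentence.
/// `maxDistance` is an assumed tolerance for spelling and positional errors.
func possiblyDroppedWords(typed: String, corrected: String, maxDistance: Int = 2) -> [String] {
    let correctedWords = tokenize(corrected)
    return tokenize(typed).filter { word in
        !correctedWords.contains { editDistance(word, $0) <= maxDistance }
    }
}

// Example: the correction silently lost "tomorow".
let typed = "i wnt to go to the shpos tomorow"
let corrected = "I want to go to the shops."
print(possiblyDroppedWords(typed: typed, corrected: corrected))
```

If the list is non-empty, the app could show the user's own text alongside the correction rather than replacing it outright. That keeps the decision with the user.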

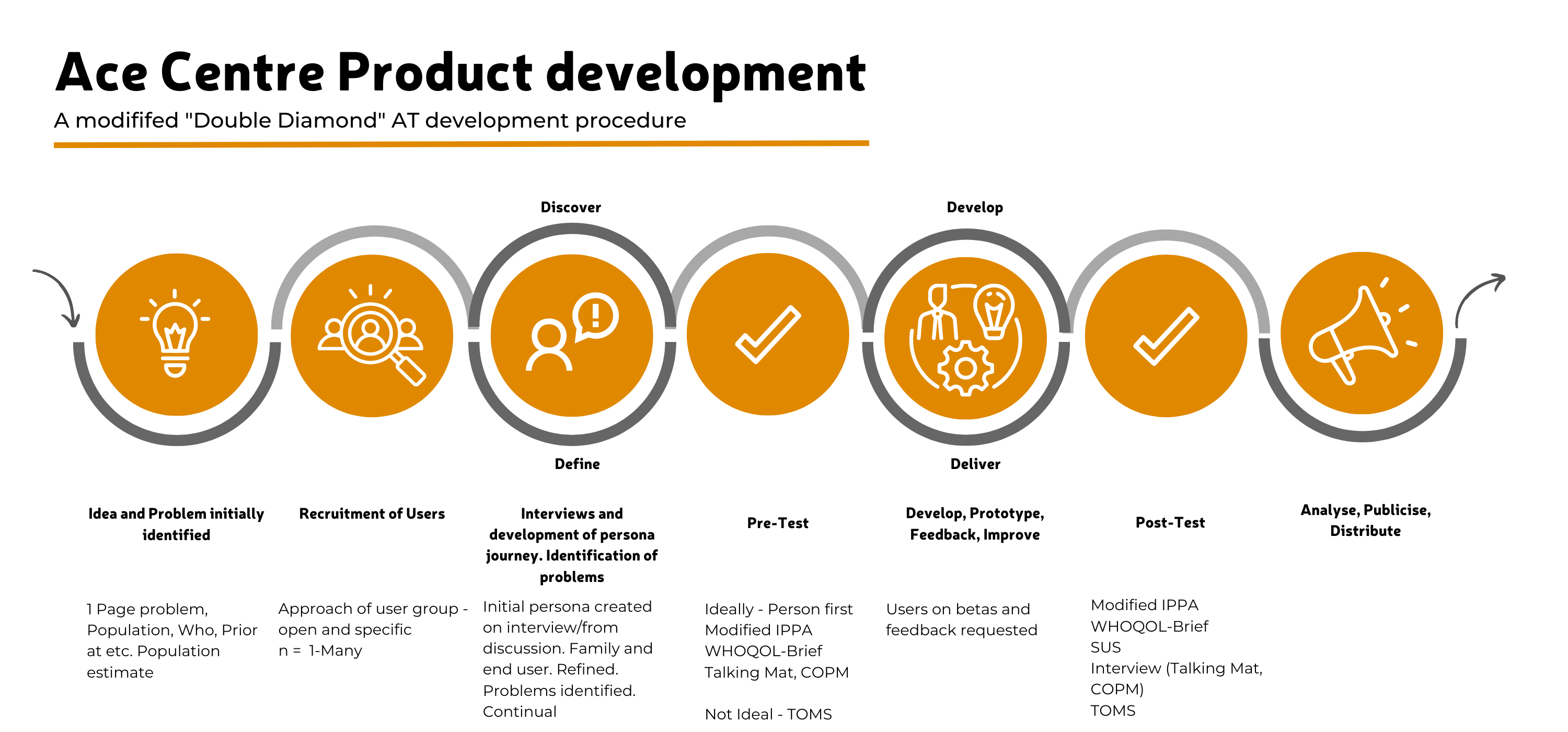

What does this process look like overall?

We follow the double-diamond development cycle. It’s a pretty common approach yet following it is not always easy.

So, what are the lessons so far?

- Iterate Fast: Rapid prototyping and adjustment are key to finding viable solutions to complex, unique problems. Solid, reliable products have a much longer development tail, but getting somewhere fast helps you see what's important.

- User-Centric Design: Keeping the user at the heart of every stage of development ensures that the technology we build truly meets their needs. It's not always easy, but it can be done. It's important to do this as part of a team, though. On your own, it is easy to lose track of whether you are getting carried away or staying on course. At Ace Centre we pride ourselves on being a transdisciplinary team, where no single person sees the whole picture.

- Continuous Learning: Every challenge presents a learning opportunity, pushing us to keep improving. And heck, it's good fun seeing big gains too.

- Passion and Persistence: A keen interest in making a difference and the drive to keep pushing forward are indispensable.