Winning the Access Award for Echo

Last week we were honoured to win the Samantha Hunnisett Access Award at the CM Awards.

To be honest, I wasn’t expecting it. Most of my attention was on the big-ticket award of the evening: the Lifetime Achievement Award for Alli Gaskin. Alli was an awesome SLT colleague and friend, and she will be deeply missed. (Had I had any clue we were up for it, I might have prepared a coherent speech and not drunk two bottles of wine beforehand. Apologies to all those who heard me garble on.)

But fittingly, Alli also had a direct connection to Echo. While working at Lancasterian she supported a child whose communication needs weren’t being met by any existing AAC system. Echo bridged that gap. She immediately recognised its value and became one of its strongest champions. That connection made winning the award all the more meaningful.

Where It Started

Echo began life as “Pasco” (Phrase Auditory Scanning Communicator), a name coined by Euan Robertson. We had it drafted for a while, because we had around six or seven end users who simply weren’t being supported by the auditory scanning options available. Then one day, one of our clients, Paul Pickford, reached out to us. Paul said:

“I’m selling some stuff and I want to donate some money.”

Paul’s donation gave us the chance to act. Using our RICE framework, we prioritised building this tool, knowing it could have a meaningful impact.

The first version was hacked together quickly, but it worked. People like Darren, recovering from a brain stem stroke, were among the first to try it. From the start, Pasco (and then Echo) was shaped directly by the people who needed it.

A Chinese ICU Case

One case that has always stayed with me came from the early days, when Echo was still called Pasco and lived as a web app.

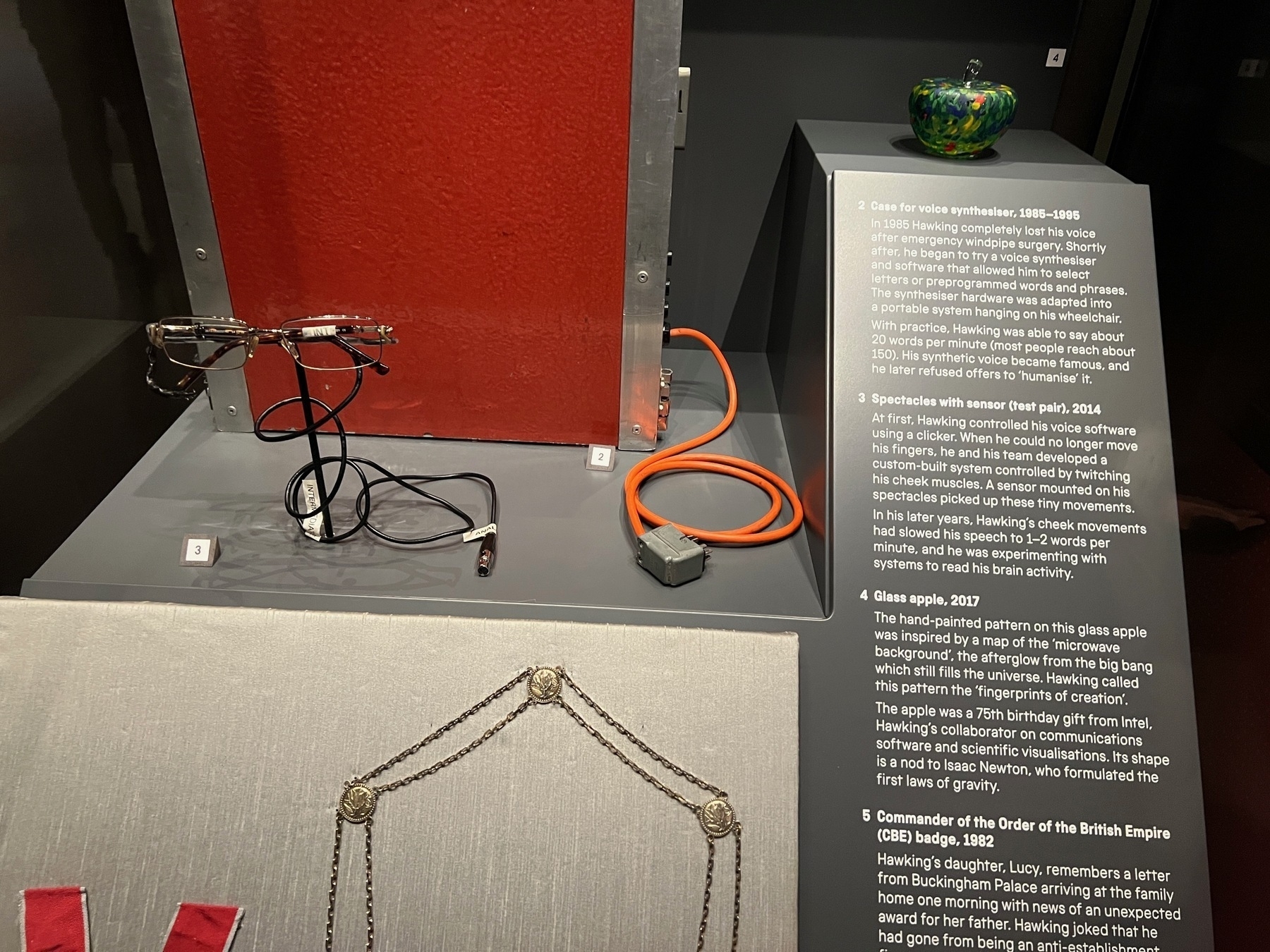

I met a woman in intensive care with significant visual impairment and minimal movement. But the bigger barrier? She was a native Chinese speaker, surrounded by English-speaking staff. Translation tools let staff tell her what was happening, but she had no way to respond.

We added multilingual support to Pasco: staff could cue in English, but she could output in Chinese. For the first time, she could communicate back. Her parents, also Chinese-only speakers, were in tears. I tried explaining with Google Translate, but it butchered my speech so badly that I gave up and just demoed it. Seeing her finally communicate was enough to convince me it had all been worth it.

Pasco Becomes Echo

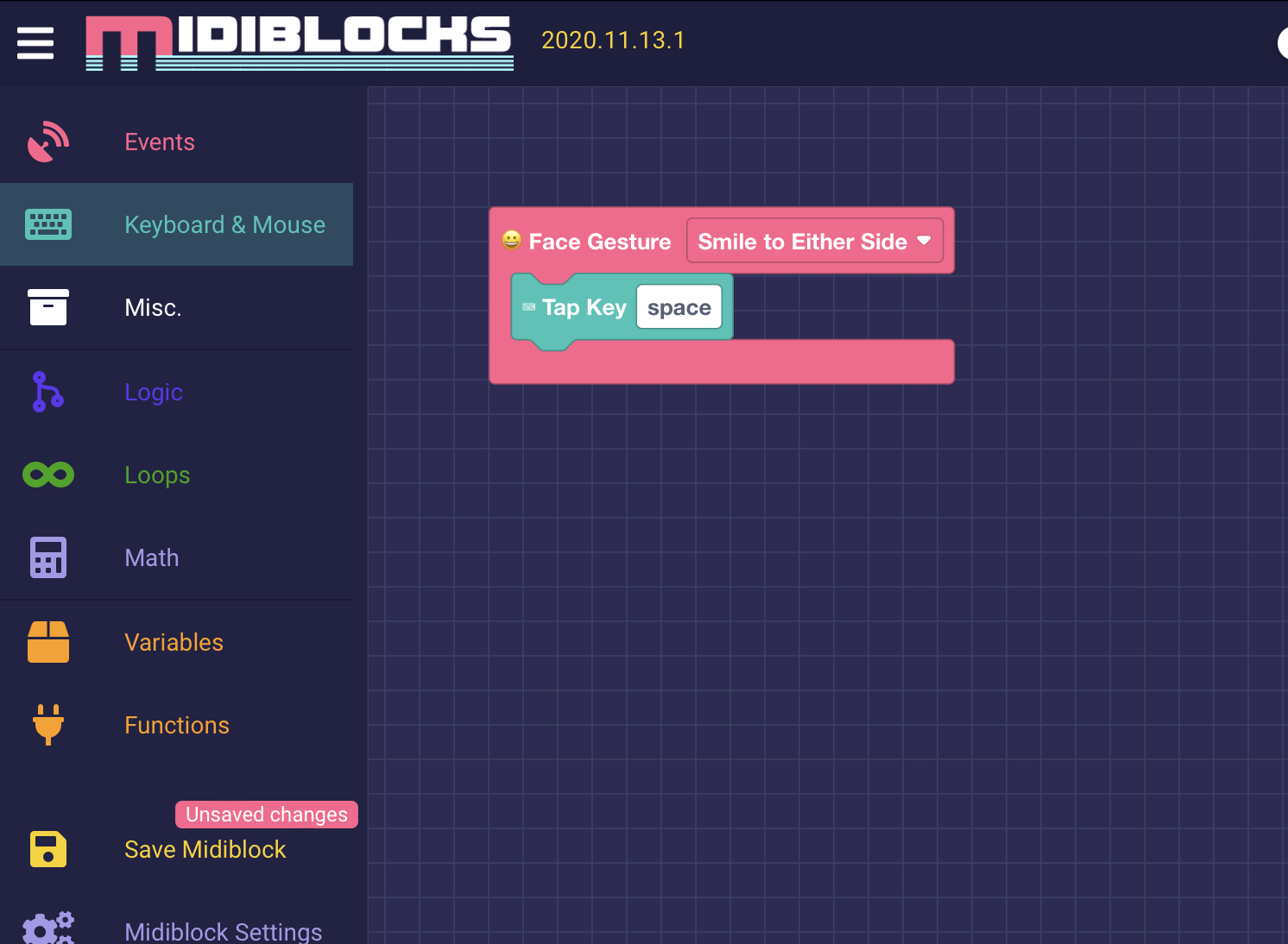

At that stage, Pasco was still rough around the edges. The two-switch scanning had bugs that were difficult to fix. That’s when Gavin Henderson rewrote it in SwiftUI. (Side note: SwiftUI is a dream. I love it.)

That rewrite became Echo.

And then something amazing happened. I was at a conference in Croatia, getting ready to speak, when I noticed a talk being given in another language. I couldn’t follow the words at first, but eventually I realised what was being demonstrated on screen: Pasco. Somehow, it had been picked up and shared across borders, because we had made it open and multilingual. That moment drove home how far-reaching these tools can become when you don’t lock them away.

Open Source and Ongoing Challenges

Echo has always been about more than one app. It’s about showing what’s possible — and letting others build on it. That’s why we’ve kept it open source: 👉 github.com/acecentre…

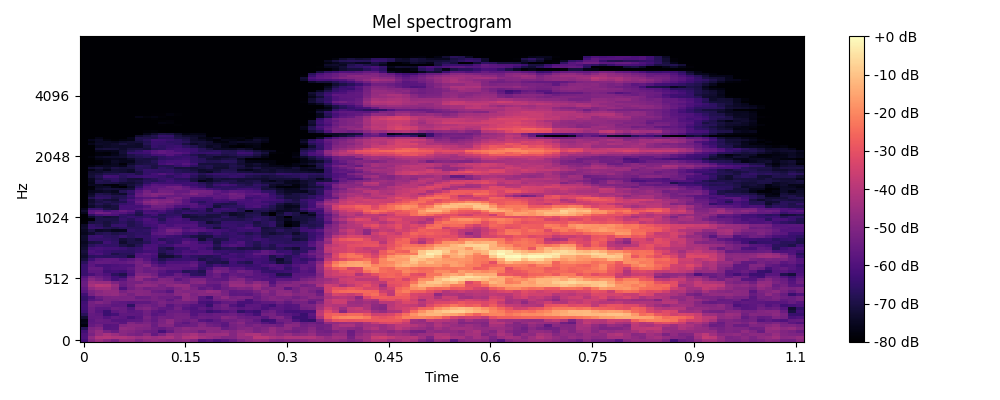

We’ve also learned when to change direction. For example, we once had a clever “auditory splitting” feature — playing different parts of the audio to different devices. It worked, but it was fiddly. And ultimately, Apple’s iOS audio system won’t let us split audio streams the way we need. Until that changes, it’s not practical.

But the point remains: innovation often starts with finding a solution for one person. When shared openly, those solutions ripple outward and benefit many.

A Team Effort

So yes, the Access Award has Echo’s name on it. But really, it belongs to:

• Paul Pickford, whose donation started it all.
• Hossein Zoda, who built the first version (Pasco).
• Gavin Henderson, who rewrote it in SwiftUI and brought Echo to life.
• Michael Ritson (Ace Centre), for deep input on visual impairment and access aspects.
• Charlie Danger, who once needed it to work with a scroll wheel as input.
• And most of all, the end users: Darren, the client in ICU, and many others whose needs shaped the app.

It’s also for the wider Ace Centre team, who foster and curate ideas like this every day by working closely with individuals with disabilities.